Sensor Hardware and External Software Setup

Setting up the environment for use with NeuroPype

This section discusses how to set up the environment in which you use NeuroPype, including the hardware connectivity and the user interface.

Sensor hardware

The NeuroPype server works out of the box with a broad spectrum of sensor hardware, including EEG devices, eye-tracking devices, motion-tracking devices, human interface devices, and multimedia devices. Most of this is made possible by the Lab Streaming Layer (LSL), a unified network interface to various kinds of hardware (as well as to software that sends or consumes time series). To use a device that supports LSL with NeuroPype, usually all you have to do is launch the vendor's program to acquire data from the device and, if necessary, enable streaming over LSL in that application (some programs always stream). If the vendor does not offer LSL support in their application, you can try to find an open-source client for the vendor's hardware; many are available from ftp://sccn.ucsd.edu/pub/software/LSL/Apps/ (mostly Windows binaries) or https://github.com/sccn/labstreaminglayer (source code). A small subset of the LSL distribution is also included with the Windows installation. The following subsections go through some examples, focusing mostly on EEG.

Once streaming has been enabled, the device publishes a data stream on the network under a vendor-specific name and with a content type: EEG for EEG devices, or Audio, Mocap, Gaze, and others for other modalities (a list of some common content-type names can be found at https://code.google.com/p/xdf/wiki/MetaData).
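When several devices stream at the same time, a receiving program typically picks the stream it wants by content type and, if needed, by name. The following is a minimal sketch of that selection logic. It assumes stream descriptors with `name()` and `type()` methods, as pylsl's `StreamInfo` objects provide; the `FakeInfo` class and the stream names are hypothetical stand-ins so that the example runs without hardware.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class FakeInfo:
    """Hypothetical stand-in for a stream descriptor such as pylsl.StreamInfo."""
    _name: str
    _type: str

    def name(self) -> str:
        return self._name

    def type(self) -> str:
        return self._type


def pick_stream(infos: Sequence, content_type: str, name: Optional[str] = None):
    """Return the first stream whose content type (and, optionally, name) matches."""
    for info in infos:
        if info.type() != content_type:
            continue  # wrong modality, e.g. Gaze instead of EEG
        if name is not None and info.name() != name:
            continue  # right modality, but a different device/stream name
        return info
    raise LookupError(f"no stream of type {content_type!r}"
                      + (f" named {name!r}" if name else ""))


if __name__ == "__main__":
    streams = [FakeInfo("Gaze-01", "Gaze"), FakeInfo("mystream", "EEG"),
               FakeInfo("Enobio", "EEG")]
    print(pick_stream(streams, "EEG").name())            # first EEG stream
    print(pick_stream(streams, "EEG", "Enobio").name())  # EEG stream by name
```

With pylsl installed, the list returned by `pylsl.resolve_streams()` could be passed in place of the fake descriptors.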

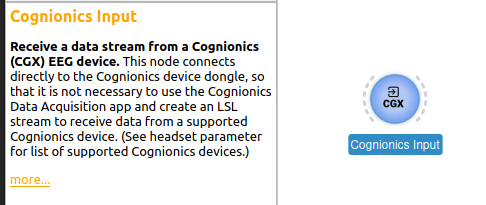

Cognionics

NeuroPype has built-in support for connecting directly to most Cognionics (CGX) devices without running the Cognionics Data Acquisition software. In this case, instead of creating an LSL stream and capturing it with the LSL Input node, use the Cognionics Input node (found in the DeviceIO category) and select the headset type (or 'auto' for automatic selection, which should work in most cases). Make sure that the Cognionics Data Acquisition software is not running, as it locks the dongle and NeuroPype will then be unable to access it. Then run your pipeline; NeuroPype should connect to your headset and capture the data. Follow the Cognionics Input node with other processing nodes (FIR Filter, etc.) as usual.

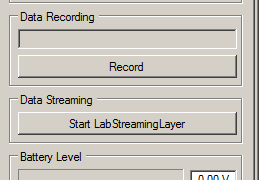

Alternatively, you can run the Cognionics DAQ application, connect to the device as per the vendor's instructions, and then click the "Start LabStreamingLayer" button on the right-side pane to create an LSL stream. In that case, you would use the LSL Input node configured to that same stream name.

InteraXon (Muse)

If you own an InteraXon headset (for instance, the Muse), and you have downloaded and installed their Research Tools package, you will find a program called muse-io in the application folder (this was tested on Windows and Mac OS). To enable LSL streaming, open a terminal window, cd into the Muse application folder, and type (where mystream is an arbitrary stream name you can assign):

> muse-io --lsl-eeg mystream

This should show you whether the connection to the hardware was successful, and then start streaming.

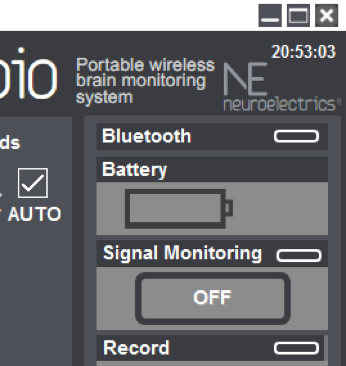

Neuroelectrics (Enobio)

If you have Neuroelectrics hardware (here the ENOBIO headset), open their acquisition program, wait until Bluetooth has paired (the indicator next to Bluetooth turns on), and then, under Signal Monitoring, click the large button initially labeled "OFF". This should enable streaming.

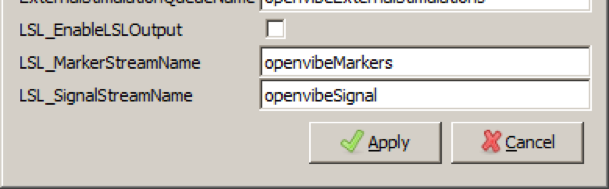

OpenViBE

OpenViBE has a cross-vendor acquisition program that works on Windows/Linux/Mac and supports 25 device classes, most of them EEG, including medical hardware (e.g., CTF/VSM MEG, Micromed, MindMedia, Mitsar, TMSi) that is not supported by other clients. To use hardware supported by OpenViBE, launch the Acquisition Server program, click Preferences, check the box LSL_EnableLSLOutput, and click Apply. This only needs to be done once. Then select your hardware in the pull-down menu (note that the LSL item in that menu is not related to streaming out; it reads from another LSL source), connect to the device as described in the respective OpenViBE documentation, and the stream should become visible.

Other LSL clients

Numerous other LSL clients (mostly for Windows) are listed at https://github.com/sccn/labstreaminglayer/wiki/SupportedDevices.wiki, covering many of the major EEG vendors as well as motion-capture, eye-tracking, multimedia, and HID hardware. If you have trouble connecting with the vendor's program or with OpenViBE, you may try one of these alternative clients.

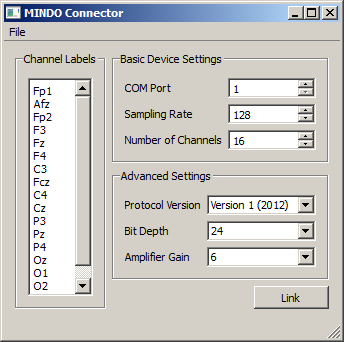

Most of these clients have a similar user interface, shown here using the client for MINDO headsets as an example; the clients can be obtained from the official LSL distribution FTP mirror at ftp://sccn.ucsd.edu/pub/software/LSL/Apps/. Some clients require the vendor's native program to run in the background and stream over the vendor-specific protocol (so that the LSL client can pick it up), while others connect directly to the hardware. In the case of MINDO Bluetooth headsets, the headset first needs to be paired via Bluetooth (as per vendor instructions); then the device settings, such as the channel labels, COM port, sampling rate, number of channels, and protocol version, can be entered (and saved under File / Save configuration). The COM port can be looked up in the Bluetooth device properties.

Once all settings are entered, click the Link button to start streaming. If this succeeds, the button turns into an Unlink button that can be pressed to stop streaming; if you get an error message or the button does not change to Unlink, communication with the device is not possible for some reason. If the program seems to "hang" while streaming (e.g., because the battery died), click Unlink: the button should immediately turn back into Link, and if it does not, you know for sure that the program is hung.

Network setup

When using LSL, keep in mind that it is designed for local networks: it does not work out of the box across networks that involve more than one router (hubs are fine, though). It is possible to set it up for such environments, but a configuration file must then be present that stores the hostnames (or IP addresses) of the known peers (this is documented in more detail on the LSL site). For internet-scale communication between the sensor hardware and the application that talks to it, other protocols such as MQTT should be preferred.
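As far as we know, liblsl reads this configuration from a file named `lsl_api.cfg`, in which the peers are listed under a `[lab]` section as `KnownPeers = {host1, host2}`; check the LSL documentation for the exact syntax and the directories in which the file is searched. The sketch below builds such a file's contents with Python's `configparser`, under that assumption. The host names and addresses are placeholders, not real machines.

```python
import configparser
import io


def make_lsl_config(peers):
    """Build the text of an lsl_api.cfg listing known peers (assumed format)."""
    config = configparser.ConfigParser()
    config.optionxform = str  # keep the key's capitalization ("KnownPeers")
    # Assumption: LSL expects the peer list comma-separated inside curly braces.
    config["lab"] = {"KnownPeers": "{" + ", ".join(peers) + "}"}
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    # Placeholder addresses; replace with the machines that should see each other.
    print(make_lsl_config(["192.168.1.20", "eeg-laptop.example.org"]))
```

The printed text would then be saved as `lsl_api.cfg` in one of the locations that liblsl searches.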

Setting up external user frontends to interact with NeuroPype

Often the NeuroPype server provides the computational engine behind user-facing applications, such as a program that controls a drone by brain signals or an app that attempts to track the user's mood based on EEG. In other cases, one wants to precisely control the user experience in a way that is synchronized with the brain or other biosignals being collected for immediate or later processing. Such synchronization of user-interface actions and data collection is often needed to capture "calibration data" (sensor readings collected under specific known user states, like thinking "up" vs. "down", being concentrated vs. relaxed, happy or sad, etc.), especially when precise timing matters, for example when trying to record the characteristic brain signature that occurs while the user presses a wrong button. Calibration is more important for EEG than for other sensor signals, because the way in which any given signal is embedded in the data is usually very person-specific. This section explains how to accomplish these things with user-interface programs that run as separate processes from the NeuroPype engine and may be written in other programming languages.
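To collect calibration data, a user interface commonly presents a cue for each known state in a randomized but balanced order, and emits a marker at each cue onset (as described under "Tagging events for use by NeuroPype"). The following is a minimal sketch of generating such a schedule with the standard library; the condition names, trial count, trial duration, and jitter are illustrative assumptions, not values prescribed by NeuroPype.

```python
import random
from typing import List, Sequence, Tuple


def calibration_schedule(conditions: Sequence[str], trials_per_condition: int,
                         trial_duration: float, jitter: float = 0.0,
                         seed: int = 0) -> List[Tuple[float, str]]:
    """Return (onset_seconds, label) pairs, each condition equally often, shuffled."""
    rng = random.Random(seed)  # seeded so that the schedule is reproducible
    labels = [c for c in conditions for _ in range(trials_per_condition)]
    rng.shuffle(labels)
    schedule = []
    t = 0.0
    for label in labels:
        schedule.append((t, label))
        # next onset: fixed trial length plus a random pause of up to `jitter` s
        t += trial_duration + rng.uniform(0.0, jitter)
    return schedule


if __name__ == "__main__":
    for onset, label in calibration_schedule(["up", "down"], 3, 4.0, jitter=1.0):
        print(f"{onset:6.2f} s  {label}")
```

Balancing the conditions avoids biasing a later classifier toward one state, and the random jitter keeps the user from anticipating the exact cue time.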

Receiving control signals from NeuroPype

In almost all applications one wants to obtain some output signal from NeuroPype (e.g., a control signal that contains the steering directions for a drone). One approach is to write a custom NeuroPype node that sends out the control signal via TCP or UDP using a protocol of one's own choosing, and have it connect to one's program. This may be necessary when one has no control over the user-interface software and must use a particular communication protocol. However, by far the easiest way is to use LSL, which hides connection establishment and protocol details behind a lightweight cross-platform library (liblsl) that makes it trivially easy to receive signals from a remote source. Interfaces to this library exist for a wide range of programming languages, including Python, C, C++, C#, Java, and MATLAB. The following Python example shows how to receive and print a control signal produced by a NeuroPype engine process running on the same computer or on the local network. By adding just four LSL-specific lines to an existing program, one can integrate a brain-controlled signal:

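```python
from pylsl import StreamInlet, resolve_byprop

# wait until a stream with content type 'Control' is found, then open an inlet
streams = resolve_byprop('type', 'Control')
inlet = StreamInlet(streams[0])

while True:
    # blocks until the next sample arrives; returns (sample, timestamp)
    print(inlet.pull_sample())
```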

Note that it is not necessary to give a hostname or IP address, since LSL discovers streams on the local network automatically. The following listing shows the same functionality in C#, as it might be used, for instance, in a 3D game built with the Unity engine:

```csharp
using System;
using System.Threading;
using LSL;

namespace ReaderExample {
    class Program {
        static void Main(string[] args) {
            // wait until a Control stream is found and open an inlet
            liblsl.StreamInfo[] found = liblsl.resolve_stream("type", "Control");
            liblsl.StreamInlet inlet = new liblsl.StreamInlet(found[0]);

            // read a 2-channel control signal
            float[] sample = new float[2];
            while (true) {
                inlet.pull_sample(sample);
                foreach (float f in sample)
                    System.Console.Write("\t{0}", f);
                System.Console.WriteLine();
            }
        }
    }
}
```
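Both listings block in `pull_sample` until a sample arrives, which suits a dedicated reader loop. A game or GUI loop usually must not block, so a common pattern is to poll once per frame with a zero timeout and keep only the newest sample. In pylsl, `pull_sample(timeout=0.0)` returns `(None, None)` when nothing is pending; the sketch below relies on that behavior and uses a hypothetical `FakeInlet` class so that it runs without a live stream.

```python
from collections import deque


class FakeInlet:
    """Hypothetical stand-in for pylsl.StreamInlet, fed from a list of samples."""

    def __init__(self, samples):
        self._queue = deque(samples)

    def pull_sample(self, timeout=0.0):
        # Mimics pylsl: (sample, timestamp), or (None, None) if nothing is pending.
        if self._queue:
            return self._queue.popleft()
        return None, None


def latest_sample(inlet):
    """Drain all pending samples without blocking; return the newest, or None."""
    newest = None
    while True:
        sample, timestamp = inlet.pull_sample(timeout=0.0)
        if sample is None:
            return newest
        newest = (sample, timestamp)


if __name__ == "__main__":
    inlet = FakeInlet([([0.1, -0.2], 10.00), ([0.3, 0.0], 10.02)])
    print(latest_sample(inlet))  # newest control sample for this "frame"
    print(latest_sample(inlet))  # nothing new since the last call
```

With a real stream, the `StreamInlet` created in the Python example would be passed instead of `FakeInlet`, and `latest_sample` would be called once per frame.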

Similar examples are available for other programming languages at https://github.com/sccn/labstreaminglayer/wiki/ExampleCode.wiki. In a similar vein, one can also send a control signal to NeuroPype to be analyzed together with some other biosignals, although this is a relatively rare use case.

Tagging events for use by NeuroPype

The other important use case, that of tagging events in time so that NeuroPype can analyze signals time-locked to those events, can also be achieved in just a few lines with LSL, again from any of the supported programming languages and operating systems. In a nutshell, whenever a particular event happens (e.g., user pressed button), a so-called "event marker" can be emitted into LSL, as follows (here shown just in Python):

```python
from pylsl import StreamInfo, StreamOutlet

info = StreamInfo('MyStream', 'Markers', 1, 0, 'string', 'myuniqueid')
outlet = StreamOutlet(info)
outlet.push_sample(['myevent1'])
```

This program declares a stream with content type Markers (named MyStream, with one channel, irregular timing, string values, and an arbitrary unique ID), opens an outlet (at which point the stream becomes visible to others), and then sends an event into it. The event is time-stamped at send time, using a clock that is synchronized over the network with those of other streams. To push more markers later, keep and reuse the same outlet, since destroying it would remove the stream from the network. The same can also be accomplished using custom protocols over TCP/UDP/IPC, by adding a custom node to NeuroPype that accepts such connections. When closed-source software must emit user-interface events with identifiable timing (say, GameMaker 2000), one might write the events to a logfile and correlate the time stamps after the fact, or use other tricks to get the event out of the software (for instance, play inaudibly high-pitched beeps and record system audio together with the other streams).
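Because markers and sensor samples carry time stamps from a common clock, analyzing signals "time-locked" to events essentially means cutting out a window of samples around each marker's time stamp. NeuroPype performs this step itself; the sketch below only illustrates the idea on plain Python lists, with made-up time stamps, values, and window bounds.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def extract_epochs(sample_times: Sequence[float], samples: Sequence[float],
                   markers: Sequence[Tuple[float, str]],
                   tmin: float, tmax: float) -> List[Tuple[str, List[float]]]:
    """Return (label, window) per marker, where window holds the samples whose
    time stamps lie in [t + tmin, t + tmax) around the marker time t.

    sample_times must be sorted in ascending order. Markers whose window is not
    fully covered by the recording are skipped.
    """
    epochs = []
    if not sample_times:
        return epochs
    for t, label in markers:
        lo, hi = t + tmin, t + tmax
        if lo < sample_times[0] or hi > sample_times[-1]:
            continue  # window extends beyond the recorded data
        start = bisect_left(sample_times, lo)  # first sample at or after lo
        stop = bisect_left(sample_times, hi)   # first sample at or after hi
        epochs.append((label, list(samples[start:stop])))
    return epochs


if __name__ == "__main__":
    times = [i * 0.25 for i in range(20)]  # a 4 Hz stream, 0.0 .. 4.75 s
    values = list(range(20))               # one value per sample
    markers = [(1.0, "myevent1"), (4.5, "late")]
    print(extract_epochs(times, values, markers, tmin=-0.5, tmax=0.75))
    # prints [('myevent1', [2, 3, 4, 5, 6])]
```

The "late" marker is dropped because its window would extend past the end of the recording; real pipelines make a similar choice or pad the data.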

See Calibration Marker Generation for another simple example script that sends markers.

Copyright (c) Syntrogi Inc. dba Intheon. All Rights Reserved.